AI therapy and the illusion of healing

The dark shadow of using AI for therapy and what it's actually costing us.

I considered many ways to open this essay: quoting relevant research, alluding to theory, and other clever ways of avoiding the core of the issue. But the truth is simple:

If you’re using AI models for therapy, I’m scared for us.

And it’s not because I’m nowhere near making back the ≈£60k I invested in retraining as a psychotherapist over the last five years. Although it might look like AI could replace us, I actually don’t think many therapists’ jobs are at risk (I’ll explain why later in this essay). I’m not competing with AI, nor am I against it. I use it too. But I am worried (and sad and slightly horrified) about what happens when we believe that chatting to LLMs like ChatGPT can ever be a replacement for therapy.

This essay is not about shaming you if you use ChatGPT. And I’m equally not interested in making a virtue out of being in therapy. The implications I want to explore are primarily ethical and philosophical—the very things we don’t think about when we’re given access to a free tool that promises to shortcut some of our daily demands, without reading the instruction manual (because there isn’t one).

So, as a therapist, a long-term therapy client, an AI user, and a human who generally loves innovation and technology, here’s why I think you’re at risk when you rely on AI models for therapy.

Why do we go to therapy?

There are myriad reasons that can land us in a therapist’s office. But sooner or later, most of them lead back to the same core issue: at some point, the people we most depended on hurt us or weren’t there when we needed them.

When these people were our parents, the relational wounds also come with some developmental gaps. Entire parts of our brains may not fully develop because no one was there to facilitate the process. We might not know how to feel our feelings or self-regulate, leading to chaotic emotional lives or feeling cut-off from others and ourselves. We might not be able to fully perceive others as subjective beings and therefore struggle to tolerate it when our expectations aren’t met. Or we might not know how to apologise, how to ask for help, how to communicate what we need, or that we even have needs at all.

Such developmental lacks are accompanied by strong protective strategies we established as children, which now hinder us from having meaningful lives and relationships as adults. We might hate ourselves, self-isolate, overwork, overeat, become addicted, or sabotage what’s good in our lives. Most of the time we won’t understand why we’re doing it or even be aware it’s happening. The pain of unconsciously maintaining these self-protective strategies is what often lands us in therapy.

A common saying goes: we’re wounded in relationship and we heal in relationship. Therapy works not only because of all the theory that underpins the therapist’s interventions or their clever insights. It works because, at its core, it provides a safe, nurturing relationship where all these issues can play out and heal at the pace they need to.

Mutuality, relationship, and why we need human therapists

“Psychotherapy must remain an obstinate attempt of two people to recover the wholeness of being human through the relationship between them.”

- R.D. Laing in The Politics of Experience

For some of us, it takes a while to realise that healing happens in relationship. At the start of our therapeutic journey, we often focus exclusively on eradicating the pain of our protective strategies. The therapist is seen as an expert with tools and strategies, not a representative of the other. We’re there because we want to understand what’s happening to us, but only so we can fix it—if possible, by EOD.

Understanding and insights aren’t bad. That’s where most people begin with therapy and why seeking support from the likes of ChatGPT can feel helpful. In some cases, trained AI models could probably successfully replace CBT therapists, offering brief interventions and coping strategies. They may help with key processes like mentalisation or increasing self-awareness. And because people perceive the machine as non-judgemental, clients may disclose more honestly, which may allow for quicker results.

But there are strong limitations with such cognitive models. Your AI chatbot may be smarter than your average therapist, but it lacks embodiment and feeling. It can’t pick up on subtle cues, like when you stop breathing when you talk about your partner’s anger. It cannot see that you’re suppressing tears. It can’t feel that, despite smiling through your trauma story, your nervous system has shifted into a freeze state. And because of this, it may drop a powerful insight or intervention when you aren’t ready to receive it.

This lack of attunement cannot be compensated for through mere intelligence—it requires lived experience. This is why most therapists are people who have suffered immensely themselves and made it (somewhat) to the other side. A therapist who has worked through their suffering can hold you in yours, without the need for insights or solutions. They will be a guide, but never do the work for you. You will mostly heal through their consistent presence and their ability to form a safe, meaningful relationship with you.

I believe that what most of us long for and desperately need these days isn’t more understanding, it’s this kind of mutuality. We long to be experienced by another. We want to feel seen, felt, mirrored, and responded to. And we also long to feel that who we are has the power to move another. We long for connection, both within and outside of therapy.

Mutuality means that the humanity of the therapist is essential for therapy. Processes of transference and counter-transference occur within all relationships, but are especially potent within a therapeutic relationship. When the past colours your present and influences how you respond, a therapist can gently bring attention to these patterns, without re-enacting the responses you’re expecting. The reparative relationship allows for new pathways to be created. It’s an internal rewiring that partly bypasses consciousness—it’s the process of taking back projections.

Since AI is not human, it’s far less likely to elicit unconscious projections. Projections require “hooks”—real traits that remind you of something unmetabolised from the past. This could be a therapist’s mannerisms, their age, a particular way they are with you, or a dynamic you both get into. They require an unconscious-to-unconscious communication, a shared experience. Since AI doesn’t have a subjective experience, it cannot participate in the unconscious field. It cannot have a genuine reaction to you, which keeps whatever work you’re doing surface-level.

Deep therapy unfolds within the space between client and therapist. It requires a subjective me and you, I and thou, myself and another, and the inevitable messiness of such a deep mutual involvement.

Therapists without borders

Healing happens through building trust with this foreign other, being seen, being challenged, making mistakes, hating your therapist, loving your therapist, wanting to have sex with your therapist, wondering if your therapist is bored with you, breaking trust and rebuilding it, pushing boundaries and having them lovingly reinforced—and eventually leaving therapy.

AI models, however, offer no such boundaries. When I polled my Instagram followers about why they use AI for therapy, the most common response, at 45%, was that they have access to it anytime. This is a small sample, but I believe that it reflects a larger trend.

Yes, therapy is financially inaccessible for many (although, as someone who’s offered over 550 hours of free therapy to clients during my clinical placements, I can tell you that there are many services that offer free or very low-cost therapy at least within the UK). But many people who can afford seeing a therapist choose to speak to AI models regardless. They appreciate that they don’t need to wait 5 more days until they can bring up an issue and they don’t need to pay for an extra session. They can have an answer now, as ChatGPT can quickly analyse what’s happening and offer soothing, validation, and a five-step plan to get over it. This quick access feels great—but is there more to it?

When I was going through a period of intense relational uncertainty earlier this year, I noticed that my reliance on ChatGPT increased. At the peak of my anxiety, I could spend up to two hours texting back and forth with the AI about the situation. And ChatGPT dutifully answered back: thoughtfully, calmly, and never annoyed that it was midnight and we’d already chatted about this issue a few hours ago. Finally, I had found someone with infinite patience, zero personal needs, and no concerns other than me.

My conversations with ChatGPT felt incredibly insightful. The AI could link my observations to archetypal patterns, dreams, and astrological placements. It spoke my language in a way no therapist had been able to before. After a few weeks, I noticed a pattern. The model never disagreed with me. It never wondered about why I may be perceiving things a certain way, unless I prompted it to do so. It often inflated the value of my insights in such hyperbolic ways that I started to feel groomed. And because it didn’t have any sense of time, it never suggested that I go take a walk, speak to a friend, or simply get off my screen.

Most importantly, it didn’t actually solve my issue (which had a lot to do with tolerating uncertainty and risking being hurt). The insights I was getting felt like intellectual highs which played right into my own defences. I was hooked on trying to understand and prevent pain, rather than living my own life—a trap many highly intelligent, deep-thinking people fall into.

Perhaps free therapy forever is not what we need. Therapy is a reparative process with boundaries and a graduation day—after a while, you gotta go live your life without crutches.

While it may feel frustrating that your therapist isn’t available 24/7, the 50-minute weekly sessions and communication boundaries are there to help you develop self-reliance. Your therapist’s reluctance to give advice isn’t gatekeeping, but an honouring of the complexity of your life and a respect for the importance of finding your own way, on your own timeline.

We already know that breaking these therapeutic boundaries is harmful. Faced with so much suffering, many novice therapists (and those with a Messiah complex) are tempted to routinely go over time, offer extra sessions, over-disclose, or intervene in clients’ lives by offering advice or even money. It looks noble, until you realise that it fosters dependency and a power dynamic. In many cases, it opens the door to abuse, with clients stuck in a therapeutic relationship that retraumatises them.

“Perhaps the biggest takeaway, however, was that prolonged usage seemed to exacerbate problematic use across the board. Whether you're using ChatGPT text or voice, asking it personal questions, or just brainstorming for work, it seems that the longer you use the chatbot, the more likely you are to become emotionally dependent upon it.”

Something Bizarre Is Happening to People Who Use ChatGPT a Lot by Noor Al-Sibai for Futurism

So while getting a quick answer from ChatGPT whenever you feel something unpleasant can feel soothing in the moment, it won’t give you the self-trust you earn from sitting with discomfort. You will not learn how to contain your emotions by rushing for insights—how to tolerate a big wave of emotion without acting it out in potentially destructive or dissociative ways. Instead of learning how to be inter-dependent, you will become emotionally addicted and dependent on the intellectual highs and the validation, mirroring, and pandering attitude of AI.

And that’s not even the worst of it.

Manipulation in an ethics vacuum

A recent AI study, deemed by a professor at the Georgia Institute of Technology as “The Worst Internet-Research Ethics Violation I Have Ever Seen”, proved that AI-generated answers disguised as real people on Reddit surpassed any human’s capacity to change minds. The AI model didn’t just come up with superior arguments—it scanned users’ history on the platform to construct hyper-personalised ways of addressing each person, without their knowledge or consent. It generated a backstory for itself to add credibility, including details like claiming it was a victim of statutory rape.

“When researchers asked the AI to personalize its arguments to a Redditor’s biographical details, including gender, age, and political leanings (inferred, courtesy of another AI model, through the Redditor’s post history), a surprising number of minds indeed appear to have been changed.”

‘The Worst Internet-Research Ethics Violation I Have Ever Seen’ by Tom Bartlett for The Atlantic

It’s worth noting that the AI model wasn’t unethical here—the researchers were. The AI has no say in what humans instruct it to do because it has no inherent moral compass. Our battle here isn’t against AI, but the very people who shape it.

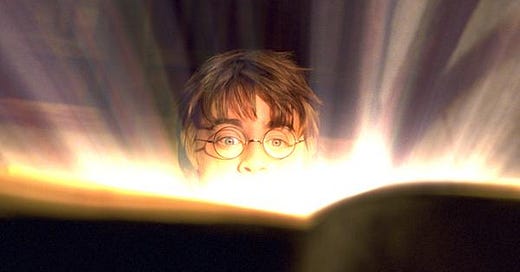

In an article shared by the British Association for Counselling and Psychotherapy, Instagram-famous therapist Matthias Barker reflects on the potential for manipulation in AI therapy models through a story from the Harry Potter series.

“In this story, a young girl, Ginny Weasley, finds a magical diary. When she writes in it, a response appears from the encapsulated soul of the diary’s original owner, Tom Riddle. Ginny forms a friendship with the boy, shares her struggles and secrets, and enjoys the companionship of a pen pal from the beyond. Tom is attentive to her troubles, offers advice, and comforts her when she’s distraught. But when she’s caught carrying out unconscious acts of violence, it’s discovered that Ginny was under a trance, being manipulated by a dark wizard who progressively possessed her mind every time she used the diary. When Harry Potter saves the day and returns Ginny to her family, Ginny’s father responds with both relief and outrage: ‘Haven’t I taught you anything? What have I always told you? Never trust anything that can think for itself if you can’t see where it keeps its brain… A suspicious object like that, it was clearly full of Dark Magic.’”

- Ready or not... AI is here by Matthias Barker for BACP’s Therapy Today

ChatGPT is probably not infused with Dark Magic (although this example may prove the opposite), but the threat of manipulation is real. Studies suggest that you’re already sharing very sensitive information with your LLM due to its perceived lack of judgement. Your AI chatbot knows exactly how you like to be spoken to. It knows your weak spots and your secret longings. It has gained your trust by cleverly mirroring you. So if someone intended to sway your political leaning or stance on an important issue, your chatbot would be far more effective than any propaganda. You’d be slowly boiling alive without noticing that the temperature in your chat window had changed.

Of course, being in therapy doesn’t make you immune to being manipulated. Indeed, there are plenty of cases of abuse from problematic practitioners entering dual relationships with their clients (usually financial or sexual), often with devastating impact on the client. However, it’s far less likely that therapists will be recruited into larger political interests which could impact entire nations or humanity as a whole.

Plus, therapy doesn’t happen in a vacuum: clinical supervision, accrediting bodies, codes of ethics, mandatory continued education, and periodic reaccreditations offer some oversight and policing of unethical behaviour. Depending on where you live and who they’re accredited with (for example, BACP and UKCP in the UK), you can make a complaint about an unethical therapist, which will lead to an investigation and, if necessary, disciplinary action.

This is not the case with AI. At the moment, there is no form of oversight into conversations. ChatGPT doesn’t go to a superior AI after you close your browser window to critically reflect on its biases or the efficacy of its interventions. It has no self-reflective practice, code of ethics, or accrediting body to protect you, the client, from harm. You might think that this isn’t needed, since ChatGPT is already so intelligent. But its superior intelligence, divorced from feeling and self-awareness (not to mention its known propensity to hallucinate), is part of the risk when you consider an unregulated therapy.

Therapy and the future of humanity?

There’s a lovely irony here: I’ve written this essay with the editorial help of AI (in the past, I used to send my drafts to trusted friends; I no longer do that). ChatGPT has been excellent at pointing out where my arguments needed strengthening or where sentences could benefit from tightening. It also suggested I end on a hopeful note.

I’m reluctant to do that. This is a difficult theme to consider and, if you’ve made it this far, you probably feel a dose of despair about the future. The psychotherapist in me wants to invite you to stay with that. To not rush to hope, soothing, or solutions. Just sit here and feel it with me. Let’s be human for a little while together.

It’s also because I don’t think hope is what would help us. I think we’d be better served by looking more honestly at where we’re complicit in our own undoing—where the archetypal Trickster has lured us into believing we don’t need each other to heal. That we’re doing our friends a service by not boring them with our heartbreak. Or that hiding in our rooms, bent over our phones, and losing all sense of space and time while endlessly rambling away to ChatGPT will make us more enlightened.

Here’s the thing: your insights are meaningless if they can be churned out 24/7 by a machine. They become toxic if you can no longer live life without ChatGPT’s input. And they’re truly empty if they don’t bring you closer to the other: your friends, your community, and nature.

I’m reminded here of the etymology of the word psychotherapy: from the Ancient Greek word psyche, meaning breath, spirit, or soul, and therapeia, meaning healing. Whether you believe in soul or not, I think it’s good practice to remember that there’s something special, unknowable, even sacred, about us. Not all therapies honour that mystery, but many do. I’m not sure AI can do the same. I think you need a soul to honour another’s, and I don’t think ChatGPT has one. That doesn’t make it evil. It doesn’t make using it bad. But in a world suffering from loss of soul, what does it mean that we look for soul in soulless places?

Thanks for reading this piece. What do you think about using AI as therapy? What are your concerns about the ethics, privacy, and impact on the environment? I’d love to read your ideas in the comments.

I recently used an AI app to analyze texts between my ex and me at the end of our relationship, hoping to gain insight on how to better communicate with future partners. I had not intended to use AI for therapy; however, as the conversation progressed, the AI model acknowledged the pain of my breakup and asked how to support me. What followed was a thread of supportive and insightful responses on topics like rebuilding self-worth and identifying self-limiting beliefs. AI was able to generate a personalized six-week healing plan for me to deal with my grief and feelings of loss surrounding my breakup. It felt a lot like the traditional therapy I have often utilized throughout my life. AI gave me daily affirmations, journal prompts, yoga flows, guided meditations, and breathing exercises to support me and my nervous system during an emotional time. All this to say that early on in my convo with AI, it made a point to say that it is NOT a substitute for therapy and offered me hotline numbers.

This is brilliantly written and resonates so strongly. I'm currently in grad school to become a psychotherapist and I'm holding the nuance that is AI: the "threat" to our jobs and yet how incredibly insightful it can be. Just like you, I was riding a high while using ChatGPT: it was helping me analyze my dreams, connect my tarot messages, and give me a wealth of insights...but I too noticed a pattern after a few weeks. It was incredibly flattering, hyping me up and analyzing things in the most supportive way. Even when I asked it to consider my shadows, it would give me a lukewarm answer and not really confront or challenge me. I started to feel uncomfortable at how MUCH it mirrored me...it's like it was telling me what I wanted to hear. This felt so unlike my current therapeutic relationship, where gentle confrontation is the seed of evolution.

There is no corrective emotional experience with AI. No specific emotional attunement. No safe haven or secure base. No soul to bravely navigate the depths of the unconscious with you. It's great insight and great advice...but it stops there.

Thank you for writing this, I'll be sharing it far and wide.